
Data Access

The Data Access module provides the fundamental layer for applications that handle spatial data from different sources, ranging from traditional DBMSs to OGC Web Services. This module is composed of base abstract classes that must be extended to create Data Access Drivers, which implement all the details needed to access data in a specific format or system.

This module provides the foundation for an application to discover what is stored in a data source and how the data is organized in it. Keep in mind that this organization is the low-level organization of the data. For instance, in a DBMS, you can find out the tables stored in a database and the relationships between them, or detailed information about their columns (data type, name and constraints).

It is not the role of this module to provide higher-level metadata about the data stored in a data source. This support is provided by another TerraLib module: the Spatial Metadata module.

This section describes the Data Access module in detail.

Design

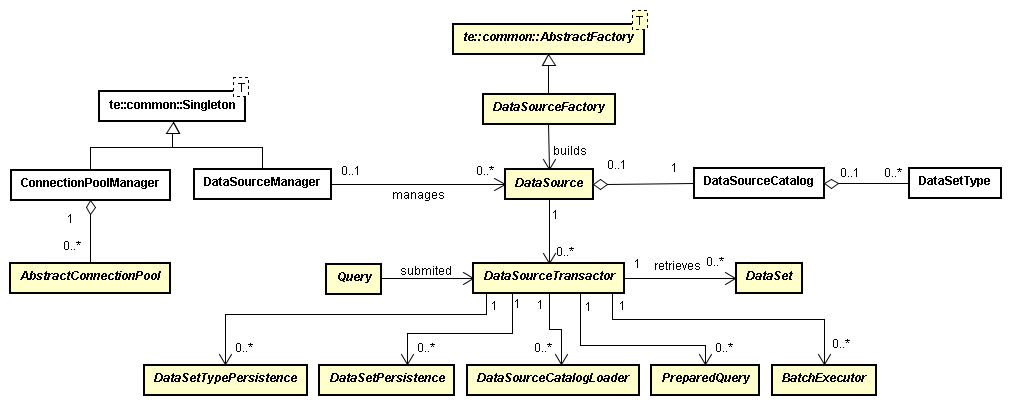

As the class diagram shows, the Data Access module provides a basic framework for accessing data. It is designed for extensibility and data interoperability, so you can easily extend it with your own data access implementation.

The requirements that drove this design were:

- extensible data formats/access: the API must be flexible enough to allow new data source driver implementations for new data formats.

- data type extension: it must be possible to add new data types to the list of supported ones. The API must provide a way for developers to access new data types that exist only in particular implementations. The new data types can be added to all data source drivers or just to some of them. This will enable the use of the extensible data types available in all object-relational DBMSs.

- query language extension: it must be feasible to add new functions to the list of supported operations in the query language of a specific driver.

- dynamic linking and loading: new drivers can be added without explicit linking. The data access architecture must support dynamic loading of data source driver modules, so that new drivers can be installed at any time, without any requirement to recompile TerraLib or the application.

The yellow classes with the names in italic are abstract and must be implemented by data access drivers. The following sections discuss each class in detail.

DataSource

The DataSource class is the fundamental class of the data access module and it represents a data repository. For example, it may represent a PostgreSQL database, an Oracle database, an OGC Web Feature Service, a directory of ESRI shape-files, a single shape-file, a directory of TIFF images, a single TIFF image or a data stream. Each system or file format requires an implementation of this class.

A data source shows the data contained in it as a collection of datasets (DataSet). The information about the data that is stored in a data source may be available in the data source catalog (DataSourceCatalog). For each dataset the catalog keeps information about its name and structure/schema (DataSetType).

Besides the descriptive information about the underlying data repository each data source also provides information about its requirements and capabilities. This information may be used by applications so that they can adapt to the abilities of the underlying data source in use.

Each data source driver must have a unique identifier. This identifier is a string (in capital letters) with the data source type name and it is available through the method getType. Examples of identifiers are: POSTGIS, OGR, GDAL, SQLITE, WFS, WCS, MYSQL, ORACLE, SHP, MICROSOFT_ACCESS.

A data source is also characterized by a set of parameters that can be used to set up an access channel to its underlying repository. This information is referred to as the data source connection information. It may be provided as an associative container (a set of key-value pairs) through the method setConnectionInfo. The key-value pairs (kvp) may contain information about the maximum number of accepted connections, the user name and password required for establishing a connection, the URL of a service or any other information needed by the data source to operate. The parameters depend on the data source driver, so check the driver documentation for additional information on the supported parameters. For instance, in a PostGIS data source it is usual to use the following syntax:

    std::map<std::string, std::string> pgisInfo;
    pgisInfo["PG_HOST"] = "atlas.dpi.inpe.br"; // or "localhost"
    pgisInfo["PG_PORT"] = "5433";
    pgisInfo["PG_USER"] = "postgres";
    pgisInfo["PG_PASSWORD"] = "xxxxxxx";
    pgisInfo["PG_DB_NAME"] = "terralib4";
    pgisInfo["PG_CONNECT_TIMEOUT"] = "4";
    pgisInfo["PG_CLIENT_ENCODING"] = "CP1252";

For a WFS data source available at http://www.dpi.inpe.br/wfs the connection information could be:

    std::map<std::string, std::string> connInfo;
    connInfo["URI"] = "http://www.dpi.inpe.br/wfs";

The method getConnectionInfo returns an associative container (set of key-value pairs) with the connection information.

Another useful piece of information available in a data source is its known capabilities. The method getCapabilities returns all information about what the data source can perform. Here you will find whether the data source implementation supports primary keys and foreign keys, whether it can be used in a threaded environment, and much more. There is a list of common key-value pairs that every data access driver must supply, although each implementation can provide additional information.

A data source can be in one of the following states: opened or closed. In order to open a data source and make it ready for use, one needs to provide the set of parameters required to set up the underlying access channel to the repository and then call one of the open methods. These methods prepare the data source to work: if the implementation needs to open a connection to a database server, open a file or get information from a Web Service, it does so here in order to bring the data source into an operational mode. You can provide the connection information either as an associative container or as a string in kvp notation.
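The exact kvp string notation depends on the driver and is not specified on this page. As a minimal sketch, assuming a hypothetical notation in which pairs are written as `key=value` and separated by `&`, a helper that converts such a string into the associative container form could look like the code below. The name `parseKvp` and both separators are assumptions made for illustration; they are not part of the TerraLib API.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper (not a TerraLib function): converts a string such as
// "PG_HOST=localhost&PG_PORT=5432" into a map of connection parameters.
// The '&' and '=' separators are assumptions made for this sketch.
std::map<std::string, std::string> parseKvp(const std::string& kvp)
{
  std::map<std::string, std::string> info;
  std::istringstream in(kvp);
  std::string pair;

  while(std::getline(in, pair, '&'))
  {
    const std::string::size_type pos = pair.find('=');

    if(pos == std::string::npos || pos == 0)
      continue; // skip malformed pairs that have no '=' or no key

    info[pair.substr(0, pos)] = pair.substr(pos + 1);
  }

  return info;
}

// Usage example: the parsed map holds one entry per well-formed pair.
void parseKvpExample()
{
  const std::map<std::string, std::string> info =
      parseKvp("PG_HOST=localhost&PG_PORT=5432");

  assert(info.size() == 2);
  assert(info.at("PG_HOST") == "localhost");
  assert(info.at("PG_PORT") == "5432");
}
```

Only the first `=` in a pair is treated as the separator, so values that themselves contain `=` are kept whole.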

Once opened, the data source can be closed, releasing all the resources used by its internal communication channel. The close method closes any database connection, opened files or resources used by the data source.

You can query the data source to find out whether it is opened or whether it is still valid (available for use) using the methods isOpened and isValid, respectively.

The data stored in a data source may be discovered using the DataSourceTransactor and DataSourceCatalogLoader. The developer may also cache the description of datasets available in the data source in its catalog (DataSourceCatalog). The method getCatalog gives you access to the cached data description in the data source. All drivers must ensure a non-NULL data source catalog when the getCatalog method is called, although it can be empty.

In order to interact with the data source you need a transactor. The getTransactor method returns an object that can execute transactions in the context of a data source. You can use this method to get an object that allows you to retrieve a dataset, insert data or modify the schema of a dataset. You don't need to cache this kind of object because each driver in TerraLib already keeps a pool. So as soon as you finish using the transactor, destroy it. For more information see the DataSourceTransactor class.

A data source repository can be created using the create class method. All you need is to provide the type of data source (its type name, for example: POSTGIS, ORACLE, WFS) and the creational information (a set of key-value pairs). Note that after creation the data source is in a “closed state”. The caller will have to decide when to open it. Not all drivers support this operation, so check the data source capabilities first.

Just as you can create a new data source, you can also drop an existing one through the drop class method. This command will also remove the data source from the data source manager (if it is stored there). Not all drivers support this operation, so check the data source capabilities first.

DataSourceFactory

All data access drivers must register a concrete factory for building data source objects. The class DataSourceFactory is the base abstract factory from which all drivers must derive their concrete factories. The following diagram shows that PostGIS, SQLite, OGR and GDAL drivers supply concrete factories derived from this base class:

You can use the DataSourceFactory to create a DataSource object as in the code snippet below, where a data source to an ESRI shape-file is created:

    // this is the connection info when dealing with a single shape-file
    std::map<std::string, std::string> ogrInfo;
    ogrInfo["URI"] = "./data/shp/munic_2001.shp";

    // use the factory to create a data source capable of accessing shape-file data
    std::auto_ptr<te::da::DataSource> ds = te::da::DataSourceFactory::make("OGR");

    // set the connection info and open the data source
    ds->setConnectionInfo(ogrInfo);
    ds->open();

    // ... operate on the data source

And the code snippet below shows how to access a set of GeoTIFF images stored in a file system folder:

    // this is the connection info when dealing with a directory of raster files
    std::map<std::string, std::string> gdalInfo;
    gdalInfo["SOURCE"] = "./data/rasters/";

    // use the factory to create a data source capable of accessing image files
    std::auto_ptr<te::da::DataSource> ds = te::da::DataSourceFactory::make("GDAL");

    // set the connection info and open the data source to make it ready for use
    ds->setConnectionInfo(gdalInfo);
    ds->open();

    // ... operate on the data source

Remember that you can also use an associative container to provide the DataSource connection parameters. The code snippet below shows how to open a PostGIS data source using an associative container with all connection parameters:

    // use the factory to create a data source capable of accessing a PostgreSQL with the PostGIS extension enabled
    std::auto_ptr<te::da::DataSource> ds = te::da::DataSourceFactory::make("POSTGIS");

    // a map from parameter name to parameter value
    std::map<std::string, std::string> connInfo;
    connInfo["PG_HOST"] = "atlas.dpi.inpe.br"; // or "localhost"
    connInfo["PG_USER"] = "postgres";
    connInfo["PG_PASSWORD"] = "xxxxxxxx";
    connInfo["PG_DB_NAME"] = "mydb";
    connInfo["PG_CONNECT_TIMEOUT"] = "4";
    connInfo["PG_CLIENT_ENCODING"] = "WIN1252"; // or "LATIN1"
    connInfo["PG_PORT"] = "5432";

    // set the connection info and open the data source to make it ready for use
    ds->setConnectionInfo(connInfo);
    ds->open();

    // ... operate on the data source
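To make the idea of drivers registering concrete factories more tangible, here is a minimal, self-contained sketch of a registry keyed by the driver type name. It is not the TerraLib implementation: the classes `DataSource`, `ShapeFileSource` and `FactoryRegistry` below are simplified, hypothetical stand-ins, and the sketch uses `std::unique_ptr` only to stay self-contained.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical stand-ins for illustration only; these are not the TerraLib classes.
class DataSource
{
  public:
    virtual ~DataSource() = default;
    virtual std::string getType() const = 0;
};

class ShapeFileSource : public DataSource
{
  public:
    std::string getType() const override { return "OGR"; }
};

// Maps a driver type name to a function that builds the matching data source.
class FactoryRegistry
{
  public:
    using Builder = std::function<std::unique_ptr<DataSource>()>;

    // Called once by each driver, for example when its module is loaded.
    void add(const std::string& type, Builder builder)
    {
      m_builders[type] = std::move(builder);
    }

    // Builds a data source for the type name, or throws if no driver registered it.
    std::unique_ptr<DataSource> make(const std::string& type) const
    {
      const auto it = m_builders.find(type);

      if(it == m_builders.end())
        throw std::runtime_error("no driver registered for type: " + type);

      return it->second();
    }

  private:
    std::map<std::string, Builder> m_builders;
};

// Usage example: register one driver and build a data source by type name.
void factoryRegistryExample()
{
  FactoryRegistry registry;
  registry.add("OGR", [] { return std::unique_ptr<DataSource>(new ShapeFileSource); });

  std::unique_ptr<DataSource> ds = registry.make("OGR");
  assert(ds->getType() == "OGR");
}
```

Because callers only name the type as a string, new drivers can be added to such a registry at run time without changing the calling code.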

DataSourceManager

The DataSourceManager is a singleton designed for managing all data source instances available in a system. It keeps track of the data sources available and it is the preferred way for creating or getting access to a data source. An application can choose any strategy to label and identify data sources, for example, using a descriptive title provided by the user or using a universally unique identifier (UUID).

Prefer using the methods from this singleton instead of using the data source factory because it keeps track of the data sources available in the system. The query processor relies on it in order to do its job.
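The sketch below illustrates the general pattern of a singleton that tracks data sources by an application-chosen identifier. It is only an illustration under simplifying assumptions: `SourceManager`, its `get` method and the `DataSource` struct are hypothetical names, not the DataSourceManager interface.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-in for a data source; not the TerraLib class.
struct DataSource
{
  std::string type;
};

// A process-wide registry of data sources, each labeled by an application-chosen id.
class SourceManager
{
  public:
    // The single instance is created on first use.
    static SourceManager& getInstance()
    {
      static SourceManager instance;
      return instance;
    }

    // Returns the data source registered under id, creating it on the first request.
    std::shared_ptr<DataSource> get(const std::string& id, const std::string& type)
    {
      std::shared_ptr<DataSource>& slot = m_sources[id];

      if(!slot)
        slot = std::make_shared<DataSource>(DataSource{type});

      return slot;
    }

    std::size_t size() const { return m_sources.size(); }

  private:
    SourceManager() = default;
    SourceManager(const SourceManager&) = delete;
    SourceManager& operator=(const SourceManager&) = delete;

    std::map<std::string, std::shared_ptr<DataSource>> m_sources;
};

// Usage example: two requests with the same id share one instance.
void sourceManagerExample()
{
  SourceManager& manager = SourceManager::getInstance();

  std::shared_ptr<DataSource> a = manager.get("my-postgis", "POSTGIS");
  std::shared_ptr<DataSource> b = manager.get("my-postgis", "POSTGIS");

  assert(a == b);
}
```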

DataSet

The DataSet class represents the fundamental unit of information transferred between a physical data source and applications at the data access level. When retrieving data from a DBMS it may represent a table of data or the result of a query generated by executing an SQL statement; for an OGC Web Service it may encapsulate a GML document with vector data or a raster in a specific image format. One interesting feature of the dataset is its uniform view of both vector and raster data.

A dataset may be defined recursively, containing other datasets. This design allows implementations to have a dataset as a collection of other datasets.

As a data collection, a dataset has an internal pointer (or cursor) that marks the current item of the collection. The move methods (e.g. moveNext, movePrevious, moveLast, moveFirst) set this internal pointer.

When a dataset is first created its internal pointer points to a sentinel location before the first item of the collection. The first call to moveNext sets the cursor position to the first item. By using the move methods you can retrieve the attributes of the elements in a dataset. The elements can be taken all at once as a dataset item (see DataSetItem class).

By default, dataset objects are not updatable and have a cursor that moves forward only. If the dataset is created as forward only, you can iterate through it only once and only from the first item to the last one. It is possible to get dataset objects that are random-access and/or updatable. Remember that data source drivers expose their dataset capabilities, and applications must rely on this information to decide how to create the dataset.

A dataset may have any number of attributes whose values can be retrieved by invoking the appropriate methods (getInt, getDouble, getGeometry, …). The attributes can be retrieved by the attribute index (an integer value) or by the name of the attribute (a string value). You can query the associated dataset schema (DataSetType) to find the type of each attribute and thus which method is suitable for retrieving its data.
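The cursor behavior described above (a sentinel position before the first item, then forward-only movement) can be sketched with a small in-memory class. `MemoryDataSet` and `readAll` are hypothetical names used only for this illustration; a real driver would fetch items from its repository instead of a vector.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-memory dataset used only to illustrate the cursor behavior.
class MemoryDataSet
{
  public:
    explicit MemoryDataSet(std::vector<std::string> items)
      : m_items(std::move(items)), m_started(false), m_pos(0)
    {
    }

    // Advances the cursor; returns false when there is no next item.
    bool moveNext()
    {
      if(!m_started)
        m_started = true;            // leave the "before first" sentinel position
      else if(m_pos < m_items.size())
        ++m_pos;                     // step forward, stopping one past the last item

      return m_pos < m_items.size();
    }

    // Returns the item under the cursor; valid only after moveNext() returned true.
    const std::string& getString() const
    {
      assert(m_started && m_pos < m_items.size());
      return m_items[m_pos];
    }

  private:
    std::vector<std::string> m_items;
    bool m_started;
    std::size_t m_pos;
};

// Usage example: a forward-only traversal visits each item exactly once.
std::vector<std::string> readAll(MemoryDataSet& ds)
{
  std::vector<std::string> out;

  while(ds.moveNext())
    out.push_back(ds.getString());

  return out;
}
```

Once `readAll` returns, the cursor sits past the last item, so a second call on the same object yields nothing; this mirrors the "iterate only once" restriction of forward-only datasets.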

If a wrong method is used for retrieving the attribute in a given position the result is unpredictable. For example, if the attribute type is an integer and the method getString is used the system can crash.
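One way for an application to protect itself is to consult the schema before calling a getter. The following sketch shows the idea on a simplified, hypothetical row layout: `AttrType`, `Attribute`, `Row` and `getIntChecked` are illustrative names, not TerraLib types, and in real code the type information would come from the DataSetType.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical type codes and row layout, for illustration only.
enum class AttrType { Int, Double, String };

struct Attribute
{
  AttrType type;
  int i;
  double d;
  std::string s;
};

typedef std::vector<Attribute> Row;

// Returns the integer at position pos, or throws if the position is invalid
// or the attribute stored there is not an integer.
int getIntChecked(const Row& row, std::size_t pos)
{
  if(pos >= row.size())
    throw std::out_of_range("attribute index out of range");

  if(row[pos].type != AttrType::Int)
    throw std::logic_error("attribute is not an integer");

  return row[pos].i;
}

// Usage example: a mismatched getter is rejected instead of misbehaving.
void checkedGetterExample()
{
  Row row;
  row.push_back(Attribute{AttrType::Int, 7, 0.0, ""});
  row.push_back(Attribute{AttrType::String, 0, 0.0, "Brasilia"});

  assert(getIntChecked(row, 0) == 7);

  bool rejected = false;
  try { getIntChecked(row, 1); } catch(const std::logic_error&) { rejected = true; }
  assert(rejected);
}
```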

As one can see in the class diagram, geometric and raster properties are treated just like any other attribute. Both have a property name and an index that can be used when retrieving data using the getGeometry or getRaster methods.

The dataset class provides another method called getWKB for retrieving the geometry in its raw form. Sometimes, this can be useful for speeding up some operations.

Our design also allows a dataset to have multiple geometric or raster attributes. It is also valid to provide a dataset that has both raster and vector geometries. This allows applications to take the most from modern DBMSs, which provide spatial extensions with both types of data (vector and raster).

When more than one geometry or raster attribute exists, one is marked as the default (see the getDefaultGeomProperty of the dataset's DataSetType).

DataSet is an abstract class, so data access drivers must implement it.

In summary:

- There are some modifiers associated with a dataset that affect how it can be traversed and whether it can be updated.

- The getTraverseType method tells how one can traverse the dataset. Possible values are FORWARDONLY and BIDIRECTIONAL. If a dataset is marked as forward only, it can only move forward. When the dataset is marked as bidirectional, it can move forward and backward.

- The getAccessPolicy method returns the read and write permissions associated with the dataset.

- If you want to know the structure of the elements of the dataset, you can use the getType method to get its description. If you need the maximum information about a dataset, you can load its full description (keys, indexes and others) through the loadTypeInfo method.

- If a dataset is part of another one, you can access its parent dataset using the getParent method.

- It is possible to compute the bounding rectangle of all elements of a given geometric property of a dataset (getExtent).

- You can also install spatial filters to refine the dataset data. The setFilter methods set a new spatial filter when fetching datasets. Only datasets that geometrically satisfy the spatial relation with the geometry/envelope will be returned.

- The DataSet class provides getter methods (getChar, getDouble, getBool, and so on) for retrieving attribute values from the current item.

- Values can be retrieved using either the index number of the attribute (numbered from 0) or its name. Take care when using attribute names as input for getter methods:

  - attribute names are case sensitive.

  - in general, using the attribute index will be more efficient.

  - relying on the attribute name may be harmful in situations where there are several attributes with the same name. In this case, the value of the first matching attribute will be returned.

  - the use of attribute names is recommended when they were used in the SQL query that generated the dataset. For attributes that are not explicitly named in the query (using the AS clause), it is safer to use the attribute index.

- The dataset interface also provides a set of setter (updater) methods that can be used to write values in the dataset, automatically updating the written values in the data source. Not all data source drivers provide this ability. See the data source capabilities for more information on this topic.

- As one can see, there are a lot of getter and setter methods for the most common C++ types and geo-spatial types. We call these the methods for native datatypes, and they are provided for maximal performance. There are also methods, provided for extensibility, that can be used to enhance the application by adding new datatypes (getValue and setValue).
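The attribute name rules above (case-sensitive comparison, first match wins) can be illustrated with a small lookup helper. This is a sketch on a plain list of names; `findAttribute` is a hypothetical function, not part of the DataSet interface.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: returns the position of the first attribute whose name
// matches exactly (case sensitive), or -1 when there is no such attribute.
long findAttribute(const std::vector<std::string>& names, const std::string& name)
{
  const std::vector<std::string>::const_iterator it =
      std::find(names.begin(), names.end(), name);

  return it == names.end() ? -1L : static_cast<long>(it - names.begin());
}

// Usage example: a query result with a repeated attribute name.
void findAttributeExample()
{
  std::vector<std::string> names;
  names.push_back("id");
  names.push_back("name");
  names.push_back("id");   // duplicate name, for example produced by a join

  assert(findAttribute(names, "id") == 0);    // the first match wins
  assert(findAttribute(names, "ID") == -1);   // names are case sensitive
}
```

The second `id` attribute can never be reached by name in this scheme, which is why the attribute index is the safer choice for unnamed or repeated columns.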

DataSetType

A DataSetType describes a DataSet schema. Every dataset has a corresponding dataset type that describes its list of attributes, including their names, types and constraints. The information about each attribute of a dataset is stored as an instance of the Property class. The DataSetType may be associated to foreign keys, primary keys, indexes, unique constraints and check constraints.

Constraint

The Constraint class describes a constraint over a DataSetType. It is the base class for the concrete types of constraints: primary key, foreign key, unique key and check constraint.

PrimaryKey

A PrimaryKey describes a primary key constraint. It may keep a pointer to an associated index and the list of properties that are part of the primary key constraint.

ForeignKey

The ForeignKey class models a foreign key constraint for a given DataSetType. It keeps a pointer to the referenced DataSetType, the list of properties that are part of the foreign key constraint and the list of referenced properties (on the referenced DataSetType) of the foreign key constraint. The type of action performed on the foreign key data may be:

- NO_ACTION: it indicates that the underlying system must produce an error indicating that the deletion or update of attributes would create a foreign key constraint violation.

- RESTRICT: it indicates that the underlying system must produce an error indicating that the deletion or update would create a foreign key constraint violation.

- CASCADE: tells the underlying system to automatically delete any rows referencing the deleted row, or to update the value of the referencing column to the new value of the referenced column, respectively.

- SET_NULL: set the referencing column(s) to null.

- SET_DEFAULT: set the referencing column(s) to their default values.

UniqueKey

A UniqueKey describes a unique key constraint. It keeps a pointer to an associated index and the list of properties that are part of the unique key constraint.

CheckConstraint

A CheckConstraint describes a check constraint. It is represented by an expression that imposes a restriction to the dataset.

Index

The Index class describes an index associated to a DataSetType. It keeps the list of properties that are part of the index. The Index type can be one of:

- BTreeType: Btree index type.

- RTreeType: RTree index type.

- QuadTreeType: QuadTree index type.

- HashType: Hash index type.

Sequence

The Sequence class can be used to describe a sequence (or number generator, or autoincrement information). The sequence may belong to the data source catalog and it can be associated to a given property of a specific dataset type.

Some Notes About DataSetType and Associated Classes

Note that some objects like DataSetType, DataSetTypeSequence, DataSetTypeIndex and others have an internal id. It is meant to be used by data access drivers and you should not rely on it in your code. These ids may change between program executions and have no meaning outside the data source implementation.

How to Use DataSet and DataSetType Classes

Once you have a DataSetType and a DataSet, do a loop over the DataSet and use the getXXX(i-th-column) methods such as getInt(i-th-column), getDouble(i-th-column):

    te::da::DataSetType* dType = dataSet->getType();
    std::size_t size = dType->size();

    while(dataSet->moveNext())
    {
      int ival = dataSet->getInt(0);
      double dval = dataSet->getDouble(1);
      te::gm::Geometry* g = dataSet->getGeometry(2);
      ...
    }

Indexed DataSet

TO BE DONE

DataSetItem

A single element in a data collection represented by a dataset is called DataSetItem. A dataset can be viewed as a collection of dataset items. Each dataset item can be a set of primitive values (like an int or double) or even more complex data (structs and objects), which can be used to represent a single row of a database table, a single raster data, a collection of raster data or even other datasets.

To find out the components of a dataset item you can query its type definition (DataSetType).

To retrieve the component values of a data item there are getter methods that safely access the data using a specific data type.

As an example, see the Getter methods for POSTGIS datasource.

DataSourceCatalog

The DataSourceCatalog keeps the information about what data are stored in a data source. The data source catalog (catalog for short) abstraction represents the data catalog of a data source. For each dataset in the data source the catalog keeps information about its structure (dataset type) and its relationship with other datasets. The TerraLib data source catalog caches only the desired descriptive information about datasets, and no overhead in managing the catalog is imposed on the application.

The catalog contains just the data source structure and not its data. If you create a DataSetType and add it to the catalog, this will not be reflected in the underlying repository. If you want to change or persist something, you should use the DataSetTypePersistence API, which will reflect any modifications to the repository and will update the catalog of a given data source.

As can be seen in the class diagram, all data source implementations provide the getCatalog method, which gives access to the cached dataset schemas if any exist. This cache can be controlled by the programmer or can be loaded all at once using the DataSourceCatalogLoader (see the getCatalogLoader in the DataSourceTransactor class). A catalog that belongs to a data source will have an explicit association to it. The method getDataSource can be used to access the data source associated to the catalog.

For now the catalog contains two types of entities: DataSetTypes and Sequences. There is a set of methods for inserting, retrieving and removing a dataset schema from the catalog, and a similar set of methods for dealing with sequences.

Each dataset schema or sequence stored in the catalog must have a unique name. This is the most important requirement because the catalog keeps an index of the datasets and sequences using their names. When the data source implementation is a database with multiple schema names, the dataset name may be formed by the schema name followed by a dot ('.') and then the table name. It is also possible to use any other strategy as long as the name given to a dataset is unique. The data source driver is responsible for defining a strategy.

Notice that a DataSourceCatalog is just an in-memory representation of the information in a data source. None of its methods changes the repository. So, take care when updating the catalog or objects associated to it manually. Prefer to use the DataSetTypePersistence API in order to make changes to a catalog associated to a given data source. The persistence will synchronize the catalog representation in main memory and the underlying physical repository.

When working with a DataSourceCatalog it is important to know:

- When adding a DataSetType that has any foreign keys, the DataSetTypes referenced by the foreign keys must already be in the catalog. In other words, you cannot insert a DataSetType in the catalog if any data referenced by it is not in the catalog yet.

- This is also important for sequences. If a sequence is owned by a property, the DataSetType associated to the property must already be in the catalog when you try to insert the sequence.

- When you remove a DataSetType from the catalog you can specify whether any associated objects must also be dropped, like sequences and foreign keys in other DataSetTypes that reference the DataSetType being removed.
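The insertion rules above (unique names, foreign key targets inserted first) can be sketched with a toy catalog. The `Schema` and `Catalog` types below are hypothetical and far simpler than DataSetType and DataSourceCatalog; they only model the ordering constraint.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical, simplified schema description; not the TerraLib DataSetType.
struct Schema
{
  std::string name;
  std::vector<std::string> referenced;  // names of schemas referenced by foreign keys
};

class Catalog
{
  public:
    // Adds a schema, enforcing unique names and already-present foreign key targets.
    void add(const Schema& schema)
    {
      if(m_schemas.count(schema.name) != 0)
        throw std::invalid_argument("duplicate dataset name: " + schema.name);

      for(const std::string& ref : schema.referenced)
      {
        if(m_schemas.count(ref) == 0)
          throw std::invalid_argument("referenced dataset not in catalog: " + ref);
      }

      m_schemas[schema.name] = schema;
    }

    bool contains(const std::string& name) const
    {
      return m_schemas.count(name) != 0;
    }

  private:
    std::map<std::string, Schema> m_schemas;
};

// Usage example: the referenced schema must be added before the referencing one.
void catalogOrderExample()
{
  Catalog catalog;

  Schema states;
  states.name = "public.states";

  Schema cities;
  cities.name = "public.cities";
  cities.referenced.push_back("public.states");

  catalog.add(states);  // referenced schema first
  catalog.add(cities);  // now the foreign key target is present

  assert(catalog.contains("public.cities"));
}
```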

Besides these basic operations, there is a set of helper methods in the catalog that make things easier when searching for related (or associated) datasets. The catalog keeps indexes for associated objects in order to retrieve and update them efficiently.

Other operations on a catalog:

- getId: returns the catalog identifier. This value must be used only by data source driver implementers, so don't rely on it!

- setId: sets the catalog identifier. This value must be used only by data source driver implementers, so don't rely on it or call this method if you are not implementing a data source!

- setDataSource: should be used only by data source driver implementations in order to link the catalog to its data source.

- clear: clears the catalog, releasing all the resources used by it.

- clone: creates a copy (clone) of the catalog.

Note that none of the methods in the DataSourceCatalog class is thread-safe. Programmers must synchronize simultaneous reading and writing to the catalog.

The following code snippet shows how to traverse the dataset information cached in a data source catalog:

    te::da::DataSourceCatalog* catalog = ds->getCatalog();

    std::size_t ndsets = catalog->getNumberOfDataSets();

    for(std::size_t i = 0; i < ndsets; ++i)
    {
      te::da::DataSetType* dt = catalog->getDataSetType(i);
      ...
    }

DataSourceTransactor

A DataSourceTransactor can be viewed as a connection to the data source for reading/writing things into it. For a DBMS it is a connection to the underlying system. Each data access driver is responsible for keeping (or not) a pool of connections.

Datasets can be retrieved through one of the transactor methods: getDataSet or query.

The transactor knows how to build some special objects that allow a more refined interaction with the data source. Through the transactor it is possible to get:

- a catalog loader (DataSourceCatalogLoader) that helps search for information on datasets stored in the data source.

- persistence objects (DataSetPersistence and DataSetTypePersistence) that can be used to alter a dataset structure or even to insert or update datasets.

- a prepared query (PreparedQuery) that can be used to efficiently execute a given query multiple times by replacing the parameters in the query.

- a batch command executor object (BatchExecutor) to submit commands in batch to the data source.

The DataSourceTransactor class is shown below:

Depending on its capabilities a transactor can support ACID transactions:

- the begin method can be used to start a new transaction.

- the commit method may be used to confirm the success of a transaction.

- the rollBack method can be used to abort a transaction due to exceptions; any changes are rolled back.

Another transaction can begin just after the current one is committed or rolled back.

Another important role of a transactor is data retrieval. The getDataSet methods may be used to retrieve a dataset given its name (according to the data source catalog) and some hints about the returned data structure (traverse type and access policy). It is also possible to retrieve only part of the data from a dataset using a geometric filter (envelope or geometry). Besides retrieving by name, it is possible to use a shortcut, the position of the dataset in the data source catalog, but in this case the data source catalog must have been loaded beforehand.

The query methods can be used to execute a query that may return some data using a generic query or the data source native language.

The execute method executes a command using a generic query representation or the data source native language.

The getPrepared method creates a prepared query object (PreparedQuery).

The getBatchExecutor method creates an object (BatchExecutor) that can be used to group a collection of commands to be sent to the data source.

The getDataSetTypePersistence and getDataSetPersistence methods can be used to create objects that know how to persist a DataSetType (and all its information) and DataSets in the data source.

The getCatalogLoader method gives you an object that can be used to retrieve the dataset schemas (DataSourceCatalogLoader).

The cancel method can be used for cancelling the execution of a query. This is useful in threaded environments.

The getDataSource method returns the parent data source of the transactor.

Some rules:

- Never release a transactor if you have opened datasets associated to it; doing so may cause errors in future accesses to the dataset objects. This also applies to prepared queries and batch command executors.

- a single application can have one or more transactors associated to a single data source or it can have many transactors with many different data sources.

- by default, every write operation through the transactor API is persisted (it is said that it is in an autocommit mode by default). The methods begin, commit and rollBack can be used for grouping operations in a single transaction.

- If you are planning a multi-threaded application, it is better not to share the same transactor between threads because its methods are not thread-safe. Instead, use one transactor per thread; otherwise you will have to synchronize access to it across the threads yourself.
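As a rough sketch of how begin, commit and rollBack can group several operations into one transaction, the function below commits only if every command succeeds and rolls back otherwise. The `Transactor` class here is a hypothetical stand-in that merely records the calls it receives; it does not reproduce the DataSourceTransactor interface or talk to any data source.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical transactor that only records the calls it receives.
class Transactor
{
  public:
    void begin() { log.push_back("begin"); }
    void commit() { log.push_back("commit"); }
    void rollBack() { log.push_back("rollBack"); }

    // Pretends to run a command; an empty command stands in for a failure.
    void execute(const std::string& command)
    {
      if(command.empty())
        throw std::runtime_error("empty command");

      log.push_back("execute");
    }

    std::vector<std::string> log;
};

// Runs all commands in one transaction: commits if all succeed, rolls back otherwise.
bool executeAll(Transactor& t, const std::vector<std::string>& commands)
{
  t.begin();

  try
  {
    for(const std::string& c : commands)
      t.execute(c);

    t.commit();
    return true;
  }
  catch(const std::exception&)
  {
    t.rollBack();
    return false;
  }
}

// Usage example: a failing command causes the whole group to be rolled back.
void executeAllExample()
{
  Transactor t;
  std::vector<std::string> commands;
  commands.push_back("first command");
  commands.push_back("");  // simulated failure

  assert(!executeAll(t, commands));
  assert(t.log.back() == "rollBack");
}
```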

The following code snippet shows how to use the data source transactor to create a catalog loader and then find out the data organization in the data source:

    // get a transactor to interact with the data source
    te::da::DataSourceTransactor* transactor = ds->getTransactor();

    // in order to access the data source catalog you need a DataSourceCatalogLoader
    te::da::DataSourceCatalogLoader* cl = transactor->getCatalogLoader();

    // let's load the basic information about all datasets available in the data source!
    cl->loadCatalog(); // load only the dataset schemas

    // now all dataset schema information is in the data source catalog
    te::da::DataSourceCatalog* catalog = ds->getCatalog();

    // you can iterate over the data source catalog to get a dataset schema
    std::size_t ndsets = catalog->getNumberOfDataSets();

    for(std::size_t i = 0; i < ndsets; ++i)
    {
      te::da::DataSetType* dt = catalog->getDataSetType(i);

      // do what you want...
      ...
    }

DataSourceCatalogLoader

The DataSourceCatalogLoader allows you to retrieve information about datasets. The methods in this class can be used to find out the dataset schemas (DataSetType), and these schemas describe how the datasets are organized in the data source.

Each data source catalog loader (hereinafter called catalog loader for short) is associated with a transactor. The caller of the getCatalogLoader method in the transactor is the owner of the catalog loader. This association is necessary because the catalog loader may use the transactor in order to retrieve information about the datasets from the data source. All methods in this class are thread-safe and throw an exception if something goes wrong during their execution.

The getDataSets method searches for the datasets available in the data source and returns a list with their names. Each dataset in a data source must have a unique name. For example, in a DBMS the name may contain the schema name before the table name (example: public.table_name).

The getDataSetType method retrieves information about a given dataset in the data source. Using this method you can get the following information about the dataset:

- the list of properties, including: name, data type, size, if the value is required or not, if it is an auto-increment

- primary key

- foreign keys

- unique keys

- check constraints

- indexes

The parameter full in the above method tells whether to retrieve the maximum amount of information about the dataset. Note that neither the sequence information for auto-increment properties nor the bounding box of the dataset is retrieved. If you need this information, see the getSequence and getExtent methods.

The getPrimaryKey method retrieves the dataset's primary key. If the dataset already has an index and the primary key is associated with it, this method will make this association.

The getUniqueKeys method searches the data source for unique keys associated with the given dataset and updates this information in the given dataset type. As in the primary key case, this method will try to associate each unique key with an existing index.

The getForeignKeys method searches for the names of the foreign keys defined over the given dataset (dt). For each foreign key this method retrieves its name and the related table name. This information is returned in the fkNames and rdts lists.

Once you have the name of the foreign key and the dataset types related to it you can load the foreign key information through the method getForeignKey.

Information about indexes can be retrieved using the getIndexes method.

The getCheckConstraints method searches in the data source for check constraints associated to the given dataset.

The list of sequences in the data source can be found using the getSequences method, and for each sequence you can retrieve its information through the getSequence method.

Another important role of the catalog loader is to make it easy to find the bounding rectangle of a geometry property of a given dataset. The getExtent method can be used for this purpose. If you want to know the partial bounding box of a query result or of a retrieved dataset, please refer to the getExtent method in the DataSet class.

The loadCatalog method has an important role: it can load information about all the datasets at once and cache this information in the data source catalog. The parameter full controls how this method works.

The last methods in this class check the existence of the objects in the data source: datasetExists, primarykeyExists, uniquekeyExists, foreignkeyExists, checkConstraintExists, indexExists and sequenceExists.

The code snippet below shows the DataSourceCatalogLoader in practice:

    // get a transactor to interact with the data source
    te::da::DataSourceTransactor* transactor = ds->getTransactor();

    // in order to access the data source catalog you need a DataSourceCatalogLoader
    te::da::DataSourceCatalogLoader* cl = transactor->getCatalogLoader();

    // now you can get the dataset names in a vector of strings
    std::vector<std::string*> datasets;

    cl->getDataSets(datasets);

    // as you know the dataset names, you can get the dataset attributes (DataSetType)
    std::vector<std::string*>::iterator it;

    std::cout << "Printing basic information about each dataset" << std::endl;

    for(it = datasets.begin(); it < datasets.end(); it++)
    {
      const std::string* name = *it;

      te::da::DataSetType* dt = cl->getDataSetType(*name);

      std::cout << "DataSet Name: " << dt->getName() << " Number of attributes:" << dt->size() << std::endl;

      for(std::size_t i = 0; i < dt->size(); ++i)
        std::cout << dt->getProperty(i)->getName() << std::endl;

      delete dt;
    }

    // remember that you are the owner of the dataset names and they are pointers to strings
    te::common::FreeContents(datasets);

    // release the catalog loader
    delete cl;

    // release the transactor
    delete transactor;

If you prefer, you can load all dataset schemas into a cache called DataSourceCatalog:

    // get a transactor to interact with the data source
    te::da::DataSourceTransactor* transactor = ds->getTransactor();

    // in order to access the data source catalog you need a DataSourceCatalogLoader
    te::da::DataSourceCatalogLoader* cl = transactor->getCatalogLoader();

    // let's load the basic information about all datasets available in the data source!
    cl->loadCatalog(); // load only the dataset schemas

    // now all dataset schema information is in the data source catalog
    te::da::DataSourceCatalog* catalog = ds->getCatalog();

    // you can iterate over the data source catalog to get a dataset schema
    std::size_t ndsets = catalog->getNumberOfDataSets();

    for(std::size_t i = 0; i < ndsets; ++i)
    {
      te::da::DataSetType* dt = catalog->getDataSetType(i);

      // do what you want...
    }

    // release the catalog loader
    delete cl;

    // release the transactor
    delete transactor;

How do you get information about a specific dataset once you know its name? It is not necessary to load the data source catalog or to get the names of all datasets. Call the method below directly:

    // NOTE: using 'true' you also get the primary key, foreign keys, unique keys, indexes and constraints
    te::da::DataSetType* dt = cl->getDataSetType("public.br_focosQueimada", true);

Data Persistence

There are two abstract classes that data access drivers must implement in order to allow efficient dataset creation (definition) and storage (insert, update, delete): DataSetTypePersistence and DataSetPersistence.

Each data access driver must implement these abstract classes considering its particular persistence support. The data source capabilities must inform applications about the persistence features provided by the driver.

DataSetTypePersistence

The DataSetTypePersistence class is an abstract class that defines the interface for implementations responsible for persisting a dataset type definition in a data source.

The methods of this class apply changes to a dataset type in a data source and then synchronize this information with the in-memory representation of the dataset type, or even with the data source catalog if one is associated with it.

// TO BE DONE

DataSetPersistence

The DataSetPersistence class is an abstract class that defines the interface for implementations responsible for persisting data from a dataset in a data source.

The methods of this class apply changes to a dataset in a data source. Items can be added to a dataset or removed from it through implementations of this class.

// TO BE DONE

Query

The data access module has a basic framework for dealing with queries. The Query classes can be used in order to abstract the developer from the specific dialect of each data access driver (or data source). You can write an object representation for your query that will be mapped into the correct SQL dialect by each driver. Each driver has to find a way to translate a query represented by an object instance of class te::query::Query to the appropriate SQL or sequence of operations. Some drivers can also use a base implementation in order to support queries.

Each data access driver must supply its query capabilities and its dialect in order to allow applications to inform what users should expect when working with the driver. This is also a strong requirement to allow the query processor module to be implemented on top of the data access.

The query classes are designed to be compatible with the OGC Filter Encoding specification. Applications can convert XML Filter Encoding documents to and from a query expression and then send the query to be processed. This is intended to make life easier for application programmers.

The general Query classes are presented in the following class diagram:

The following code snippet shows a simple usage example:

    te::query::PropertyName* property = new te::query::PropertyName("spatial_column");
    te::query::Distance* d = new te::query::Distance(10.0, TE_KM);
    te::query::ST_DWithin* opBuff = new te::query::ST_DWithin(property, d);
    Where* w = opBuff;
    ...
    te::query::Select q(selectItems, from, w);

Expressions

The key class in a query is Expression. It models a statement that can be evaluated, ranging from a Literal value (such as an integer or a geometry) to a more complex function (like Substring or ST_Touches). There are a lot of Expression subclasses. We provide specializations for special functions, such as the spatial, comparison, arithmetic and logic operators, as a shortcut when writing code in C++. Look at the class diagram for a more comprehensive understanding:

EXPRESSION DIAGRAM

The Select Operation

The Select class models a query operation and it has the following class diagram:

SELECT DIAGRAM

In a select you can specify a list of output expressions using the Fields class:

FIELDS DIAGRAM

The From class models a from clause and can be used to inform the sources of data for the query, as one can see in the class diagram:

FROM DIAGRAM

The Where class models an expression that must evaluate to true:

WHERE DIAGRAM

The OrderBy class:

ORDER BY DIAGRAM

The GroupBy class:

GROUP BY DIAGRAM

Insert Operation

Update Operation

CreateDataSet Operation

DropDataSet Operation

How can you create a Query Operation?

Let's start with a Select query.

The first fragment below shows how to retrieve information about Brazil from the feature data set called country:

    // output columns
    te::query::Fields* fds = new te::query::Fields;

    te::query::Field* col1 = new te::query::Field(new te::query::PropertyName("cntry_name"));
    te::query::Field* col2 = new te::query::Field(new te::query::PropertyName("the_geom"));

    fds->push_back(col1);
    fds->push_back(col2);

    // from
    te::query::From* from = new te::query::From;

    te::query::FeatureName* ftName = new te::query::FeatureName("country");

    from->push_back(ftName);

    // Where
    te::query::Where* where = new te::query::Equal(new te::query::PropertyName("cntry_name"),
                                                   new te::query::LiteralString(new std::string("Brazil")));

    // The whole selection query
    te::query::Select q(fds, from, where, 0);

    // And finally the query execution
    te::da::Feature* feature = transactor->query(q);

Now, let's find all the countries with a population greater than 100,000,000, in ascending order:

    // output columns
    te::query::Fields* fds = new te::query::Fields;

    te::query::Field* col1 = new te::query::Field(new te::query::PropertyName("cntry_name"));
    te::query::Field* col2 = new te::query::Field(new te::query::PropertyName("the_geom"));

    fds->push_back(col1);
    fds->push_back(col2);

    // from
    te::query::From* from = new te::query::From;

    te::query::FeatureName* ftName = new te::query::FeatureName("country");

    from->push_back(ftName);

    // Where
    te::query::Where* where = new te::query::GreaterThan(new te::query::PropertyName("pop_cntry"),
                                                         new te::query::LiteralInt(100000000));

    // Order By
    te::query::OrderByItem* oitem = new te::query::OrderByItem(new te::query::PropertyName("pop_cntry"));

    te::query::OrderBy* orderBy = new te::query::OrderBy;

    orderBy->push_back(oitem);

    // Finally... the query
    te::query::Select sql(fds, from, where, orderBy);

    te::da::Feature* feature = transactor->query(sql);

How are Query objects translated to an SQL Statement in a DBMS based data source driver?

The query module uses the visitor pattern. The QueryVisitor class can be used by drivers to implement their own query interpreter.

As we intend to support several DBMS-based data sources, we have a default implementation of this visitor, called SQLVisitor. This class implements an ISO-compliant translator for the query object. Each DBMS dialect, however, can be controlled by an SQLDialect object responsible for helping the SQLVisitor translate the query to the target native language. See the class diagram that supports this basic implementation:

QUERY DIALECT FIGURE

In the above picture, each data access driver is responsible for providing its own SQL dialect. This dialect is used to translate an object query to the driver's native language. For data sources based on spatial database systems this task can be accomplished quickly: you just need to register the right SQL dialect. You can use one of the following approaches: (a) registering the dialect by providing an XML file; (b) registering the dialect in the C++ code of the driver.
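To make the interplay between a visitor and a dialect more concrete, here is a minimal self-contained sketch. It does not use the TerraLib classes: `Expr`, `Literal`, `Property`, `Function`, `Visitor`, `Dialect` and `SqlVisitor` are hypothetical names that only mimic the roles described above, and the dialect is reduced to a plain map from a generic function name to the native one.

```cpp
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// All types below are hypothetical; they are not the te::query classes.
struct Literal;
struct Property;
struct Function;

// Visitor interface: one visit method per concrete expression type.
struct Visitor
{
  virtual ~Visitor() = default;
  virtual void visit(const Literal& e) = 0;
  virtual void visit(const Property& e) = 0;
  virtual void visit(const Function& e) = 0;
};

struct Expr
{
  virtual ~Expr() = default;
  virtual void accept(Visitor& v) const = 0;
};

struct Literal : Expr
{
  explicit Literal(std::string v) : value(std::move(v)) {}
  void accept(Visitor& v) const override { v.visit(*this); }
  std::string value;
};

struct Property : Expr
{
  explicit Property(std::string n) : name(std::move(n)) {}
  void accept(Visitor& v) const override { v.visit(*this); }
  std::string name;
};

struct Function : Expr
{
  explicit Function(std::string n) : name(std::move(n)) {}
  void accept(Visitor& v) const override { v.visit(*this); }
  std::string name;
  std::vector<std::unique_ptr<Expr>> args;
};

// Maps a generic function name to the name used by one target DBMS.
using Dialect = std::map<std::string, std::string>;

// Appends the SQL text of each visited expression to an output string.
class SqlVisitor : public Visitor
{
  public:

    SqlVisitor(const Dialect& d, std::string& out) : m_dialect(d), m_sql(out) {}

    // Literals are quoted without any escaping: illustration only.
    void visit(const Literal& e) override { m_sql += "'" + e.value + "'"; }

    void visit(const Property& e) override { m_sql += e.name; }

    void visit(const Function& e) override
    {
      // Fall back to the generic name when the dialect has no entry.
      auto it = m_dialect.find(e.name);
      m_sql += (it != m_dialect.end() ? it->second : e.name) + "(";

      for(std::size_t i = 0; i < e.args.size(); ++i)
      {
        if(i != 0)
          m_sql += ", ";

        e.args[i]->accept(*this);  // recurse into each argument
      }

      m_sql += ")";
    }

  private:

    const Dialect& m_dialect;
    std::string& m_sql;
};
```

With this arrangement, supporting another DBMS only means supplying a different `Dialect` map; the expression classes and the visitor stay untouched.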

See the XML schema for the first approach… TO BE DONE

At this point you can ask: “And what about the data sources not based on a DBMS?”

Other kinds of data sources have a different job to do: they need to interpret the query themselves! Some of them implement just the basic methods of a query.

Every data source publishes its query capabilities, and this allows the application to choose the right way to interact with the data source driver. We plan to build a query processor that will hide this kind of job from the applications. More information on the query processor can be seen here TO BE DONE.

Step by Step: How to Implement a Query in a DBMS based Data Source Driver

As discussed in the previous section, there are two different approaches:

- XML based

- C++ based

In the C++ based approach you can register the driver dialect in the initialization routine of your driver like:

    te::query::SQLDialect* mydialect = new te::query::SQLDialect;

    mydialect->insert("+", new te::query::BinaryOpEncoder("+"));
    mydialect->insert("-", new te::query::BinaryOpEncoder("-"));
    mydialect->insert("*", new te::query::BinaryOpEncoder("*"));
    mydialect->insert("/", new te::query::BinaryOpEncoder("/"));
    mydialect->insert("=", new te::query::BinaryOpEncoder("="));
    mydialect->insert("<>", new te::query::BinaryOpEncoder("<>"));
    mydialect->insert(">", new te::query::BinaryOpEncoder(">"));
    mydialect->insert("<", new te::query::BinaryOpEncoder("<"));
    mydialect->insert(">=", new te::query::BinaryOpEncoder(">="));
    mydialect->insert("<=", new te::query::BinaryOpEncoder("<="));
    mydialect->insert("and", new te::query::BinaryOpEncoder("and"));
    mydialect->insert("or", new te::query::BinaryOpEncoder("or"));
    mydialect->insert("not", new te::query::UnaryOpEncoder("not"));
    mydialect->insert("upper", new te::query::FunctionEncoder("upper"));
    mydialect->insert("lower", new te::query::FunctionEncoder("lower"));
    mydialect->insert("substring", new te::query::TemplateEncoder("substring", "($1 from $2 for $3)"));
    mydialect->insert("trim", new te::query::TemplateEncoder("trim", "(both $1 from $2)"));
    mydialect->insert("second", new te::query::TemplateEncoder("extract", "(second from $1)"));
    mydialect->insert("year", new te::query::TemplateEncoder("extract", "(year from $1)"));
    mydialect->insert("str", new te::query::TemplateEncoder("cast", "($1 as varchar)"));
    mydialect->insert("sum", new te::query::FunctionEncoder("sum"));
    mydialect->insert("st_intersects", new te::query::FunctionEncoder("st_intersects"));
    mydialect->insert("st_relate", new te::query::FunctionEncoder("st_relate"));
    mydialect->insert("st_transform", new te::query::FunctionEncoder("st_transform"));

    DataSource::setDialect(mydialect);

Notice that in this implementation we have a method called setDialect in the DataSource class. This is a static method specific to our implementation.

Then in the DataSourceTransactor implementation, you can have something like:

    te::da::Feature* te::pgis::Transactor::query(const te::query::Select& q)
    {
      std::string sql;

      sql.reserve(128);

      te::query::SQLVisitor visitor(*(DataSource::getDialect()), sql);

      q.accept(visitor);

      return query(sql);
    }

This is all you need to have a working querying driver!
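The template strings registered in the dialect, such as "($1 from $2 for $3)", suggest a positional substitution of the function arguments. How TemplateEncoder actually expands them is not documented here, so the following is only a sketch of one plausible expansion; `encodeTemplate` is a hypothetical free function, not part of the te::query API.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: replaces "$1", "$2", ... in a template by the
// already-encoded arguments and prefixes the result with the function name.
std::string encodeTemplate(const std::string& name,
                           const std::string& tmpl,
                           const std::vector<std::string>& args)
{
  std::string out = name;

  for(std::size_t i = 0; i < tmpl.size(); ++i)
  {
    if(tmpl[i] == '$' && i + 1 < tmpl.size() &&
       std::isdigit(static_cast<unsigned char>(tmpl[i + 1])))
    {
      // Read the (possibly multi-digit) 1-based argument index.
      std::size_t idx = 0;
      std::size_t j = i + 1;

      while(j < tmpl.size() && std::isdigit(static_cast<unsigned char>(tmpl[j])))
      {
        idx = idx * 10 + static_cast<std::size_t>(tmpl[j] - '0');
        ++j;
      }

      // Indexes without a matching argument expand to nothing in this sketch.
      if(idx >= 1 && idx <= args.size())
        out += args[idx - 1];

      i = j - 1;  // continue right after the placeholder
    }
    else
    {
      out += tmpl[i];
    }
  }

  return out;
}
```

Under these assumptions, calling the helper with the name "substring", the template "($1 from $2 for $3)" and the arguments name, 1 and 3 yields the text substring(name from 1 for 3).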

Step by Step: How to Create Your Own Visitor to Implement the Query Support

You can also have your own implementation of QueryVisitor. In this case, the easiest way is to create a subclass of te::query::FilterVisitor in your data source driver. Your visitor will implement the pure virtual methods from that base class. You will have something like this:

Look at the PostGIS data source implementation: you will find the files QueryVisitor.h and QueryVisitor.cpp (src/terralib/postgis). They implement the query visitor interface and can be used as a good starting point.

Then, in the transactor class of your data source implementation, start implementing the methods that accept a Query as a parameter. For example:

    te::da::Feature* te::pgis::Transactor::query(te::query::Select& q)
    {
      std::string sql;

      sql.reserve(128);

      QueryVisitor visitor(sql);

      q.accept(visitor);

      // calling the query interface for the native language
      return query(sql);
    }

PreparedQuery

The PreparedQuery can be used to optimize queries that are executed repeatedly with the same structure (only the parameter values change from one execution to the next). This object encapsulates a feature that most of today's DBMSs provide: a way to implement queries that are parsed and planned just once, so that each execution only needs to supply the new parameters.

// TO BE DONE

BatchExecutor

The BatchExecutor class models an object that submits commands in batch to the data source. This means that you can group a set of commands to be sent to the data source. A BatchExecutor can be obtained through the getBatchExecutor method of a DataSourceTransactor. The batch executor is influenced by how the transactor is set to handle transactions. In autocommit mode (without an explicit transaction in course), it updates the data source after the execution of each command; if the transactor has started a transaction, the data source is modified only when the transactor's commit method is called.
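Since the BatchExecutor interface is not documented yet, the grouping idea alone can be sketched with a hypothetical class. `ToyBatchExecutor`, `add` and `execute` are illustrative names, the commands are plain strings, and the data source is replaced by a callback; the interaction with transactions is left out.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Hypothetical batch: collects textual commands and submits them together.
// It is NOT the te::da::BatchExecutor class.
class ToyBatchExecutor
{
  public:

    // Queues a command without sending it.
    void add(const std::string& command) { m_commands.push_back(command); }

    // Sends every queued command, in insertion order, to the given sink
    // and empties the queue. Returns how many commands were sent.
    std::size_t execute(const std::function<void(const std::string&)>& send)
    {
      const std::size_t n = m_commands.size();

      for(const std::string& c : m_commands)
        send(c);

      m_commands.clear();

      return n;
    }

    // Number of commands still waiting to be sent.
    std::size_t size() const { return m_commands.size(); }

  private:

    std::vector<std::string> m_commands;
};
```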

// TO BE DONE

ConnectionPoolManager

// TO BE DONE

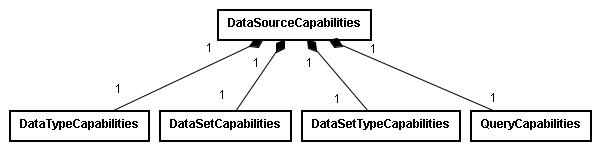

Common Data Source Capabilities

Each data source provides a set of common capabilities:

Besides the common capabilities, each driver can also provide specific capabilities that must be handled by special code.

Some capabilities are described below. To access all the details about capabilities click here.

DataSourceCapabilities Class

The DataSourceCapabilities class lets you retrieve:

- Access Policy: tells the data source access policy. It can be one of the following values:
  - NO_ACCESS: no access allowed;
  - R_ACCESS: read-only access allowed;
  - W_ACCESS: write-only access allowed;
  - RW_ACCESS: read and write access allowed.

- Transaction Support: if TRUE, the transactor supports ACID transactions. In other words, the methods begin, commit and rollBack (of a DataSourceTransactor) have effect. If FALSE, calling these methods does nothing.

- DataSetPersistence Capabilities: if TRUE, the data source supports the DataSetPersistence API. If FALSE, the data source doesn't support this API.

- DataSetTypePersistence Capabilities: if TRUE, the data source supports the DataSetTypePersistence API. If FALSE, the data source doesn't support this API.

- Prepared Query Capabilities: if TRUE, the data source supports the PreparedQuery API. If FALSE, the data source doesn't support this API.

- Batch Executor Capabilities: if TRUE, the data source supports the BatchExecutor API. If FALSE, the data source doesn't support this API.

- Specific Capabilities: a list of key-value pairs that data source drivers can use to supply specific capabilities.

DataTypeCapabilities Class

The DataTypeCapabilities class tells whether a data source supports a specific data type. For instance, some drivers support boolean types and others don't. Each data source must tell, at least, whether it supports the following data types. For each of them, TRUE means that the data source supports the type and FALSE means that it does not:

- BIT: bit types;
- CHAR: char types;
- UCHAR: unsigned char types;
- INT16: 16-bit int types;
- UINT16: 16-bit unsigned int types;
- INT32: 32-bit int types;
- UINT32: 32-bit unsigned int types;
- INT64: 64-bit int types;
- UINT64: 64-bit unsigned int types;
- BOOLEAN: boolean types (true or false);
- FLOAT: 32-bit floating point numbers;
- DOUBLE: 64-bit floating point numbers;
- NUMERIC: arbitrary precision data types;
- STRING: string data types;
- BYTE ARRAY: byte array (BLOB) data types;
- GEOMETRY: geometric data types;
- DATETIME: date and time data types;
- ARRAY: arrays of homogeneous elements;
- COMPOSITE: compound data types;
- RASTER: raster data types;
- DATASET: nested datasets;
- XML: XML types;
- COMPLEX: complex numbers (integer or float types);
- POLYMORPHIC: polymorphic types.

A data source can also give a hint about data type conversion. The getHint method can be used to find out whether the driver suggests a conversion (for example, BOOL → INT32).
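One way to picture such a hint is as a lookup from an unsupported type to a suggested replacement. The sketch below is a self-contained illustration, not the TerraLib API: `DataType`, `ToyDataTypeCapabilities`, `addHint` and `resolve` are hypothetical names, and only the idea of a BOOL → INT32 suggestion comes from the text.

```cpp
#include <map>
#include <set>
#include <stdexcept>

// Hypothetical type codes; the real TerraLib identifiers may differ.
enum class DataType { Boolean, Int32, Double, String };

// Hypothetical capabilities holder: supported types plus conversion hints.
class ToyDataTypeCapabilities
{
  public:

    void setSupported(DataType t) { m_supported.insert(t); }

    bool supports(DataType t) const { return m_supported.count(t) != 0; }

    // Registers that values of type 'from' should be stored as 'to'.
    void addHint(DataType from, DataType to) { m_hints[from] = to; }

    // Returns the type to use for 't': 't' itself if it is supported,
    // otherwise the hinted type. Throws std::out_of_range when 't' is
    // neither supported nor covered by a hint.
    DataType resolve(DataType t) const
    {
      return supports(t) ? t : m_hints.at(t);
    }

  private:

    std::set<DataType> m_supported;
    std::map<DataType, DataType> m_hints;
};
```

An application could call `resolve` before creating a property, so that a boolean attribute is silently stored as a 32-bit integer on drivers that lack a boolean type.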

DataSetTypeCapabilities Class

This class tells what kinds of constraints and indexes are supported by a given data source. The basic set of well-known capabilities is:

- PRIMARY KEY: TRUE if the data source supports primary key constraints, otherwise FALSE.
- UNIQUE KEY: TRUE if the data source supports unique key constraints, otherwise FALSE.
- FOREIGN KEY: TRUE if the data source supports foreign key constraints, otherwise FALSE.
- SEQUENCE: TRUE if the data source supports sequences, otherwise FALSE.
- CHECK CONSTRAINTS: TRUE if the data source supports check constraints, otherwise FALSE.
- INDEX: TRUE if the data source supports some type of index, otherwise FALSE.
- RTREE INDEX: TRUE if the data source supports r-tree indexes, otherwise FALSE.
- BTREE INDEX: TRUE if the data source supports b-tree indexes, otherwise FALSE.
- HASH INDEX: TRUE if the data source supports hash indexes, otherwise FALSE.
- QUADTREE INDEX: TRUE if the data source supports quad-tree indexes, otherwise FALSE.

DataSetCapabilities Class

This set of information can be used to find out what the dataset implementation of a given data source can do. The well-known capabilities are:

- BIDIRECTIONAL DATASET: TRUE if it supports traversing the dataset in a bidirectional way, otherwise FALSE.
- RANDOM DATASET: TRUE if it supports traversing the dataset in a random way, otherwise FALSE.
- INDEXED DATASET: TRUE if it supports traversing the dataset using a given key, otherwise FALSE.
- EFFICIENT MOVE PREVIOUS: if TRUE, move previous has no performance penalty; if FALSE, this operation can take some computational effort.
- EFFICIENT MOVE BEFORE FIRST: if TRUE, move before first has no performance penalty; if FALSE, this operation can take some computational effort.
- EFFICIENT MOVE LAST: if TRUE, move last has no performance penalty; if FALSE, this operation can take some computational effort.
- EFFICIENT MOVE AFTER LAST: if TRUE, move after last has no performance penalty; if FALSE, this operation can take some computational effort.
- EFFICIENT MOVE: if TRUE, move has no performance penalty; if FALSE, this operation can take some computational effort.
- EFFICIENT DATASET SIZE: if TRUE, getting the dataset size has no performance penalty; if FALSE, this operation can take some computational effort.
- DATASET INSERTION: if TRUE, the dataset API allows one to add new items.
- DATASET UPDATE: if TRUE, the dataset API allows one to update the dataset at the cursor position.
- DATASET DELETION: if TRUE, the dataset API allows one to erase the dataset item at the cursor position.

QueryCapabilities Class

An object of this class can be used to describe the query support of a given data source implementation:

- SQL DIALECT: TRUE if the data source supports the Query API, otherwise FALSE.
- INSERT QUERY: TRUE if the data source supports the insert command, otherwise FALSE.
- UPDATE QUERY: TRUE if the data source supports the update command, otherwise FALSE.
- DELETE QUERY: TRUE if the data source supports the delete command, otherwise FALSE.
- CREATE QUERY: TRUE if the data source supports the create command, otherwise FALSE.
- DROP QUERY: TRUE if the data source supports the drop command, otherwise FALSE.
- ALTER QUERY: TRUE if the data source supports the alter command, otherwise FALSE.
- SELECT QUERY: TRUE if the data source supports the select command, otherwise FALSE.
- SELECT INTO QUERY: TRUE if the data source supports the select into command, otherwise FALSE.

Data Source Capabilities Example

The code snippet below shows how to retrieve some capabilities from a data source:

    // retrieve the data source capabilities
    const te::da::DataSourceCapabilities& capabilities = ds->getCapabilities();

    // let's print the informed capabilities...
    std::cout << "== DataSource Capabilities ==" << std::endl
              << " - Access Policy: ";

    switch(capabilities.getAccessPolicy())
    {
      case te::common::NoAccess:
        std::cout << "NoAccess (No access allowed)" << std::endl;
      break;

      case te::common::RAccess:
        std::cout << "RAccess (Read-only access allowed)" << std::endl;
      break;

      case te::common::WAccess:
        std::cout << "WAccess (Write-only access allowed)" << std::endl;
      break;

      case te::common::RWAccess:
        std::cout << "RWAccess (Read and write access allowed)" << std::endl;
      break;
    }

    std::cout << "Support Transactions: " << capabilities.supportsTransactions() << std::endl;
    std::cout << "Support Dataset Persistence API: " << capabilities.supportsDataSetPesistenceAPI() << std::endl;
    std::cout << "Support Dataset Type Persistence API: " << capabilities.supportsDataSetTypePesistenceAPI() << std::endl;
    std::cout << "Support Prepared Query API: " << capabilities.supportsPreparedQueryAPI() << std::endl;
    std::cout << "Support Batch Executor API: " << capabilities.supportsBatchExecutorAPI() << std::endl;

    std::cout << ":: DataType Capabilities" << std::endl;
    const te::da::DataTypeCapabilities dataTypeCapabilities = capabilities.getDataTypeCapabilities();
    std::cout << "Support INT32: " << dataTypeCapabilities.supportsInt32() << std::endl;

    std::cout << ":: DataSetType Capabilities" << std::endl;
    const te::da::DataSetTypeCapabilities dataSetTypeCapabilities = capabilities.getDataSetTypeCapabilities();
    std::cout << "Support Primary Key: " << dataSetTypeCapabilities.supportsPrimaryKey() << std::endl;

    std::cout << ":: DataSet Capabilities" << std::endl;
    const te::da::DataSetCapabilities dataSetCapabilities = capabilities.getDataSetCapabilities();
    std::cout << "Support Traversing in a Bidirectional way: " << dataSetCapabilities.supportsBidirectionalTraversing() << std::endl;

    std::cout << ":: Query Capabilities" << std::endl;
    const te::da::QueryCapabilities queryCapabilities = capabilities.getQueryCapabilities();
    std::cout << "Support SQL Dialect: " << queryCapabilities.supportsSQLDialect() << std::endl;
    std::cout << "Support SELECT command: " << queryCapabilities.supportsSelect() << std::endl;

Utility Classes

ScopedTransaction

ScopedTransaction is a utility class that helps coordinate transactions. It can be used to create an object that automatically rolls back a transaction when it goes out of scope. When the client has finished performing its operations, it must explicitly call the commit method. This tells ScopedTransaction not to roll back when it goes out of scope.
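The behaviour described above is the usual RAII guard idiom. The following sketch shows it with hypothetical types: `ToyTx` stands in for a transactor and `ToyScopedTransaction` for the guard. Whether the real ScopedTransaction calls begin in its constructor is an assumption made here.

```cpp
// Hypothetical minimal transactor, used only to keep the sketch self-contained.
struct ToyTx
{
  int commits = 0;
  int rollbacks = 0;

  void begin() {}
  void commit() { ++commits; }
  void rollBack() { ++rollbacks; }
};

// RAII guard: begins a transaction on construction (assumption) and rolls
// it back on destruction unless commit() was called first.
class ToyScopedTransaction
{
  public:

    explicit ToyScopedTransaction(ToyTx& t) : m_t(t), m_done(false) { m_t.begin(); }

    ~ToyScopedTransaction()
    {
      if(!m_done)
        m_t.rollBack();
    }

    // Commits and disarms the automatic rollback.
    void commit()
    {
      m_t.commit();
      m_done = true;
    }

    // A guard owns one transaction, so copying is disabled.
    ToyScopedTransaction(const ToyScopedTransaction&) = delete;
    ToyScopedTransaction& operator=(const ToyScopedTransaction&) = delete;

  private:

    ToyTx& m_t;
    bool m_done;
};
```

The benefit of this design is that an early return or an exception between the construction of the guard and the call to commit leaves the data source unchanged without any explicit cleanup code.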

DataSourceInfo and DataSourceInfoManager

DataSourceInfo is a container class for keeping information about a data source. This class separates the concept of data source from that of data access driver. For example, at application level one can have a data source named File that uses different drivers to access data, depending on user preferences and on the dialogs used to create the data source.

DataSourceInfoManager is a singleton that keeps the information about all registered data sources.
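As an illustration of this split between a data source and its driver, here is a minimal singleton registry. Everything in it is hypothetical: the fields of `ToyDataSourceInfo`, the class `ToyInfoManager` and its `add` and `find` methods are assumptions, since the members of the real classes are not listed in this section.

```cpp
#include <map>
#include <string>

// Hypothetical record; the real DataSourceInfo members are not listed here.
struct ToyDataSourceInfo
{
  std::string title;       // name shown at application level, e.g. "File"
  std::string driverName;  // driver chosen to access the data
};

// Hypothetical singleton registry keyed by a data source identifier.
class ToyInfoManager
{
  public:

    static ToyInfoManager& getInstance()
    {
      static ToyInfoManager instance;  // created on first use
      return instance;
    }

    // Registers (or overwrites) the information stored under 'id'.
    void add(const std::string& id, const ToyDataSourceInfo& info) { m_infos[id] = info; }

    // Returns a null pointer when no data source is registered under 'id'.
    const ToyDataSourceInfo* find(const std::string& id) const
    {
      auto it = m_infos.find(id);
      return it == m_infos.end() ? nullptr : &it->second;
    }

  private:

    ToyInfoManager() = default;
    ToyInfoManager(const ToyInfoManager&) = delete;
    ToyInfoManager& operator=(const ToyInfoManager&) = delete;

    std::map<std::string, ToyDataSourceInfo> m_infos;
};
```

Two entries can share the same title while pointing to different drivers, which is the situation described for the data source named File.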

Adapters

DataSet Adaptor: TO BE DONE

DataSetAdapter

RasterDataSetAdapter

DataSetRasterAdapter

A Short Note About OGC and ISO Standards

From Theory to Practice

Module Summary

    -------------------------------------------------------------------------------
    Language          files     blank   comment      code    scale   3rd gen. equiv
    -------------------------------------------------------------------------------
    C++                 104      2016      2120      4757 x   1.51 =        7183.07
    C/C++ Header        114      5125      8952      3538 x   1.00 =        3538.00
    -------------------------------------------------------------------------------
    SUM:                218      7141     11072      8295 x   1.29 =       10721.07
    -------------------------------------------------------------------------------

Besides the C++ code there are also some XML schemas (xsd) for some elements:

- Query

Final Remarks

- It is allowed to have more than one concrete factory per data access driver implementation

- Data Access drivers may implement more than one driver. One of the following strategies can be used:

- the driver may rely on special parameters to create facades to each implementation

- the driver registers different concrete factories

- The list of connection parameters for a specific implementation has no restrictions.

- It is possible to have driver specializations (for example: MySQL → MySQL++).

- Add the ability to work with compressed geometries in a driver based only in WKBs.

- DataSetTypeCheckConstraint must use the expression syntax; otherwise it makes no sense!

- Create the numeric type and substitute it in the dataset.

- Create the Array type and replace the getArray methods.

- If we want to be even more extensible, we will have to change the visitor classes, because today they define what can be visited as fixed methods. This can be left for a much later stage, since it will neither prevent nor cause side effects in the development of TerraLib. In this case we could have a visitor factory, and each driver could register its concrete objects that would know how to create the visitors! This applies to: FilterVisitor, FilterCapabilitiesVisitor, SortByVisitor.

- Show how one can extend the list of binary operators!

- Remove the use of the name attribute in the Function class, so that functions are registered and a pool of functions can be kept with the arguments previously allocated for each type of function.

- There is a decision that is hard to make… Filter is nice but it could be simpler… maybe it would be better to have a different arrangement… a Query independent of Filter and a translator from Filter to Query! For example, the SortBy order can only take one property and cannot be a more general expression such as a function… this may limit some DBMS!

- We can speed up the translation of the query object to the correct SQL dialect by using SQL encoders. This way we can be more dynamic and still be efficient.

- In this case, each driver registers, for a function name, its formatter object, which will be used to transform the function into SQL.

- We can deliver an SQL visitor implementation that leaves 99% of the work done, requiring the driver designer only to supply the mapping of functions and formatters. This can be done through an XML file that each driver must ship along with it!

- There is room for a lot in an implementation:

- function parameters can be input/output;

- we can have a class for operators, and they can tell what their notation is: prefix, postfix or infix

- we can have a catalog of functions common to all DBMSs

- we can have another catalog of functions per data source, listing the functions particular to that data source

- Remember that function return types can even be SET OF (TYPE)

- In the BinarySpatialOp and BBOX classes we need to use an envelope that contains an SRS!

- We could even think of splitting each class hierarchy into its own visitor, but in the end… having just one will be much simpler and more efficient, since we will always need to traverse more than one hierarchy!

- If we wanted, we could indulge in grouping the functions by categories: UnaryLogical/UnarySpatial, as the OGC folks did in Filter… but to me this is irrelevant!

- In the future it may be interesting to include a parameter telling whether the operator is prefix or postfix. For now, I have not found anything that needs this!

- The types reported by the driver need to be refined: instead of a true, there should be a list of the supported subtypes (as in the case of string, date and time, geometry).